Will SB 1047 cover many models?

Something like "Will SB 1047 cover models from more than 10 companies in 2029?", where "cover" refers to the bill's definition of a "covered model."

Scott Aaronson

Schlumberger Centennial Chair of Computer Science, University of Texas at Austin

“They say that it will discourage startups, even though the whole point of the $100 million provision is to target only the big players (like Google, Meta, OpenAI, and Anthropic) while leaving small startups free to innovate.”

Shtetl-Optimized – Sep 4, 2024

Zvi Mowshowitz

Commentator, Don't Worry About the Vase

“What this does do is very explicitly and clearly show that the bill only applies to a handful of big companies. Others will not be covered, at all.”

Don't Worry About the Vase – Jun 5, 2024

Scott Wiener

State Senator, California

“By focusing its requirements on the well-resourced developers of the largest and most powerful frontier models, SB 1047 puts sensible guardrails in place against risk while leaving startups free to innovate without any new burdens.”

California Senate – May 20, 2024

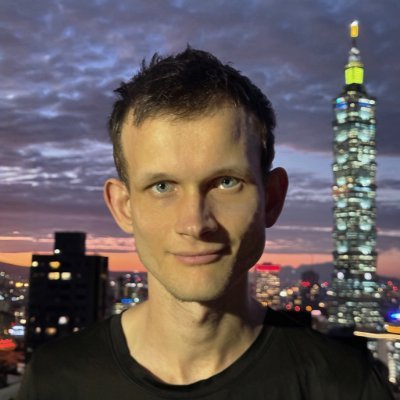

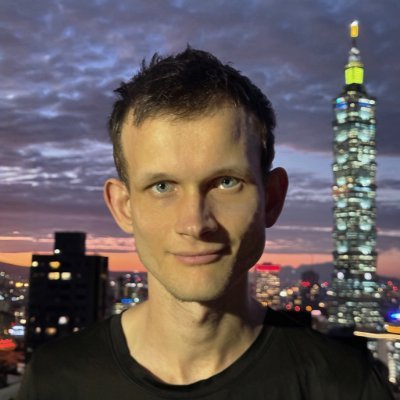

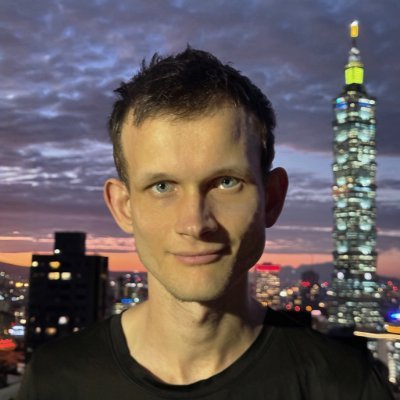

Vitalik Buterin

Founder, Ethereum

“The dollar-based covered model definition removes the "it's a slippery slope to covering everything" concern”

X – Aug 8, 2024

Simon Last

Co-founder, Notion

“California’s SB 1047 strikes a balance between protecting public safety from such harms and supporting innovation, focusing on common sense safety requirements for the few companies developing the most powerful AI systems.”

The LA Times – Aug 7, 2024

Manifold Markets

Prediction market median, Manifold Markets

“If passed, will SB 1047 "cover" models/fine-tuned models from more than 10 organisations in 2029? - 55%”

Manifold Markets – Sep 24, 2024

Andreessen Horowitz

Company, Venture Capital

“So this threshold would quickly expand to cover many more models than just the largest, most cutting-edge ones being developed by tech giants ... this $100 million amendment to train might seem like a reasonable compromise at first, but when you really look at it, it has the same fundamental flaws as the original FLOP threshold.”

a16z – Jun 18, 2024

Danielle Fong

CEO, Lightcell Energy

“Will SB 1047 cover many models?... I think this is highly likely... any frontier model wanting to compete at the raw compute level with @xAi in 2025, by 2029, will surpass the compute threshold and probably the dollar threshold. It seems highly likely that all of the hyperscalers reach this multiple times (OpenAI, Google, Anthropic, Meta).”

X – Sep 2, 2024

Dean W. Ball

Research Fellow, George Mason University

“SB 1047 will almost certainly cover models from more than 10 companies in 2029. ... the bill is not just about language models ... [also] we really have no idea how the bill's "training cost" threshold will be calculated in practice”

X – Sep 2, 2024

Will SB 1047 reduce the named harms?

Quoting the bill, these are: "Mass casualties or at least five hundred million dollars ($500,000,000) of damage"

Fei-Fei Li

Professor, Stanford

“SB-1047 will unnecessarily penalize developers, stifle our open-source community, and hamstring academic AI research, all while failing to address the very real issues it was authored to solve.”

Fortune – Aug 5, 2024

Andreessen Horowitz

Company, Venture Capital

“The practical effect of these liability provisions will be to drive AI development underground or offshore.”

a16z – Jun 18, 2024

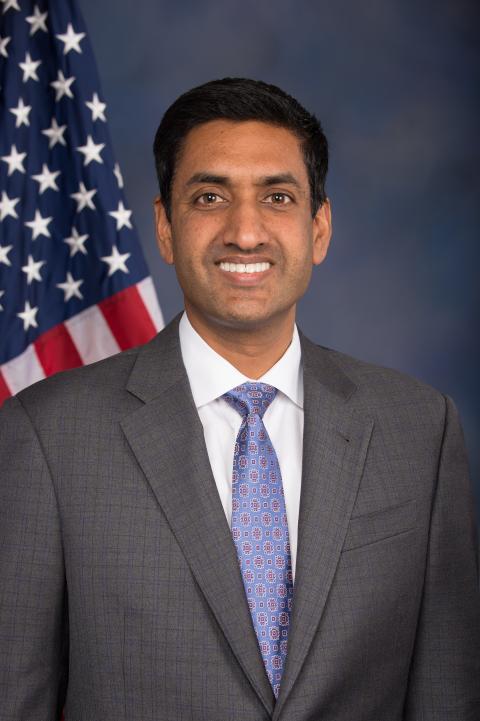

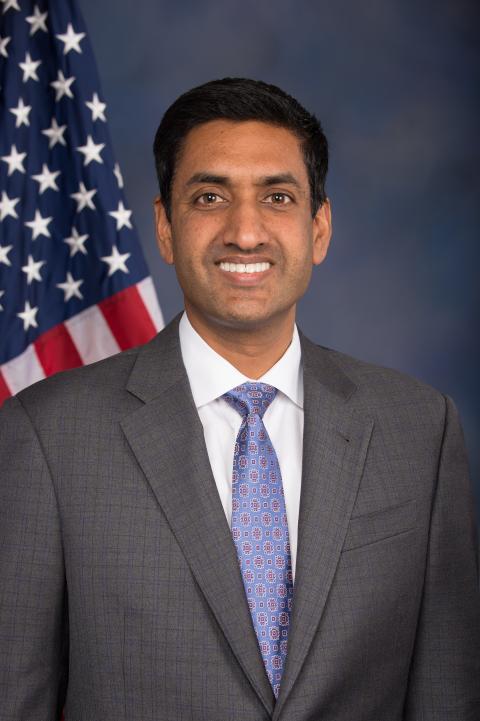

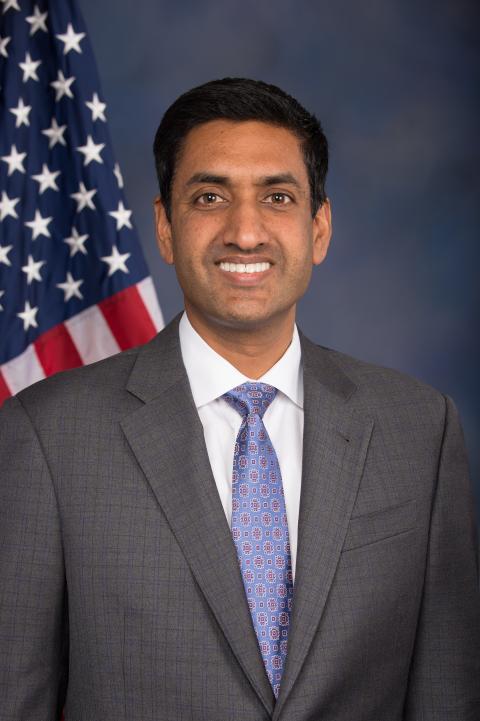

Ro Khanna

House Representative, US

“[I] appreciate the intention behind SB 1047 but am concerned that the bill as currently written would be ineffective, punishing of individual entrepreneurs and small businesses, and hurt California’s spirit of innovation.”

Ro Khanna – Aug 12, 2024

Yann LeCun

Chief Scientist, Meta

“Premature regulation of artificial intelligence will only serve to reinforce the dominance of the big technology companies and stifle competition... Regulating research and development in AI is incredibly counterproductive”

Financial Times – Oct 18, 2023

Andrew Ng

Adjunct faculty, Stanford

“The bill is deeply flawed because it makes the fundamental mistake of regulating a general purpose technology rather than applications of that technology.”

Time – Aug 28, 2024

Vitalik Buterin

Founder, Ethereum

“The charitable read of the bill is that the (medium-term) goal is to mandate safety testing, so if during testing you discover world-threatening capabilities/behavior you would not be allowed to release the model at all.”

X – Aug 26, 2024

Zvi Mowshowitz

Commentator, Don't Worry About the Vase

“It seems like a good faith effort to put forward a helpful bill. It has a lot of good ideas in it. I believe it would be net helpful.”

Don't Worry About the Vase – Feb 12, 2024

Lawrence Lessig

Professor, Harvard

“Governor Newsom will have the opportunity to cement California as a national first-mover in regulating AI. Legislation in California would meet an urgent need.”

Time – Aug 6, 2024

Geoffrey Hinton

Winner, Turing Award

“Powerful AI systems bring incredible promise, but the risks are also very real and should be taken extremely seriously. SB 1047 takes a very sensible approach to balance those concerns.”

Scott Wiener press release – Aug 14, 2024

Gabriel Weil

Professor, Touro Law Center

“The rules imposed by SB-1047 on frontier AI model developers are reasonable. Failure to comply with them should be discouraged through the sort of appropriate penalties included in the bill.”

Lawfare – May 7, 2024

Simon Last

Co-founder, Notion

“California’s SB 1047 strikes a balance between protecting public safety from such harms and supporting innovation”

LA Times – Aug 7, 2024

Scott Wiener

State Senator, California

“SB 1047 is legislation to ensure the safe development of large-scale artificial intelligence systems by establishing clear, predictable, common-sense safety standards for developers of the largest and most powerful AI systems.”

Scott Wiener – Aug 14, 2024

Holly Elmore

Executive Director, Pause AI US

“We need you to help us fight for SB 1047, a landmark bill to help set a benchmark for AI safety, decrease existential risk, and promote safety research”

LessWrong – Aug 11, 2024

Is SB 1047 going to hamstring AI development?

At minimum: "Will 2 or more AI companies significantly shift their operations from California and give SB 1047 as the reason for doing so?" Other outcomes count too.

Jan Leike

Co-lead of the Alignment Science team, Anthropic

“The FUD around impact on innovation and startups doesn't seem grounded: If your startup spends >$10 million on individual fine-tuning runs, then it can afford to write down a safety and security plan and run some evals.”

X – Sep 5, 2024

Scott Aaronson

Schlumberger Centennial Chair of Computer Science, University of Texas at Austin

“While the bill is mild, opponents are on a full scare campaign saying that it will strangle the AI revolution in its crib, put American AI development under the control of Luddite bureaucrats, and force companies out of California.”

Shtetl-Optimized – Sep 4, 2024

Simon Last

Co-founder, Notion

“As a startup founder, I am confident the bill will not impede our ability to build and grow. ... Others claim the legislation would drive businesses out of the state. That’s nonsensical.”

The LA Times – Aug 7, 2024

Elon Musk

CEO, Tesla

“This is a tough call and will make some people upset, but, all things considered, I think California should probably pass the SB 1047 AI safety bill.”

X – Aug 25, 2024

Geoffrey Hinton

Winner, Turing Award

“AI systems bring incredible promise, but the risks are also very real and should be taken extremely seriously. SB 1047 takes a very sensible approach to balance those concerns. I am still passionate about the potential for AI to save lives through improvements in science and medicine, but it’s critical that we have legislation with real teeth to address the risks.”

California Senate – May 20, 2024

Dario Amodei

CEO, Anthropic

“In our assessment the new SB 1047 is substantially improved, to the point where we believe its benefits likely outweigh its costs. However, we are not certain of this, and there are still some aspects of the bill which seem concerning or ambiguous to us.”

Letter from Anthropic – Aug 20, 2024

Dean W. Ball

Research Fellow, George Mason University

“SB 1047 would be a net negative for AI development, but in and of itself I doubt many companies would feel an incentive to leave the state...The broader point is this: SB 1047 will make it seem to everyone--everyone--that California is broadly hostile to AI [development]”

X – Sep 2, 2024

Ro Khanna

House Representative, US

“I agree wholeheartedly that there is a need for legislation and appreciate the intention behind SB 1047 but am concerned that the bill as currently written would be ineffective, punishing of individual entrepreneurs and small businesses, and hurt California’s spirit of innovation”

Ro Khanna (personal website) – Aug 12, 2024

Danielle Fong

CEO, Lightcell Energy

“There's a good chance for this, but it's complex...[SB 1047] will hamstring development, but it won't primarily drive it elsewhere -- it will primarily be negative sum, and primarily on the open source ecosystem. The main pressure will be on preventing people from releasing open weights, imo.”

X – Sep 2, 2024

Yann LeCun

Chief Scientist, Meta

“- Making technology developers liable for bad uses of products built from their technology will simply stop technology development. - It will certainly stop the distribution of open source AI platforms, which will kill the entire AI ecosystem, not just startups, but also academic research.”

X – Jun 5, 2024

Andrew Ng

Adjunct faculty, Stanford

“It defines an unreasonable “hazardous capability” designation ... (such as causing $500 million in damage)... If the bill is passed in its present form, it will stifle AI model builders, especially open source developers.”

X – Jun 5, 2024

Fei-Fei Li

Professor, Stanford

“I’m deeply concerned about California’s SB-1047, Safe and Secure Innovation for Frontier Artificial Intelligence Models Act. While well intended, this bill will not solve what it is meant to and will deeply harm #AI academia, little tech and the open-source community.”

X – Aug 5, 2024

Andreessen Horowitz

Company, Venture Capital

“Most startups will simply have to relocate to more AI-friendly states or countries, while open source AI research in the U.S. will be completely crushed due to the legal risks involved”

a16z – Jun 18, 2024

Will SB 1047 cover Open Source models soon?

At minimum: "Will SB 1047 cover any Open Source model before 2029, not including those of Meta, OpenAI, Anthropic?" Here "cover" refers to the bill's "covered model" definition, which includes both models and fine-tuned models. Other outcomes may also count.

Vitalik Buterin

Founder, Ethereum

“the new "full shutdown" definition (copies under your control only) removes the "it de-facto bans open source" concern (also, the $100m threshold is so high that it's quite possible no one will try open-sourcing something above-threshold anyway)”

X – Aug 8, 2024

Manifold Markets

Prediction market median, Manifold Markets

“If passed, will SB 1047 cover any Open Source model before 2029, not including those of Meta, OpenAI, Anthropic, etc? - 34%”

Manifold Markets – Aug 31, 2024

Gabriel Weil

Professor, Touro Law Center

“I can read legislative text and it's clear to me that SB 1047 bends over backward (too much in my view) to accommodate open source and takes an overall light-touch approach to AI regulation.”

X – Aug 7, 2024

Dean W. Ball

Research Fellow, George Mason University

“I am less certain about this, because SB 1047 itself may change the calculus about whether or not to open-source a model! If the question was instead "will SB 1047 cover a model that would have been open source but for SB 1047?" my answer would most surely be yes...Thus I lean slightly, but far from strongly, toward yes on this one.”

X – Sep 2, 2024

Danielle Fong

CEO, Lightcell Energy

“This seems highly likely. Even outside of Meta, international teams such as Mistral and teams training on fair use works in permissive legal regimes such as Japan are likely to be made... the inability to fully lock down open weight models might combine with liability to produce a chilling effect... On the other hand, Meta and others may push through anyway... It is very likely to slow open source releases, though.”

X – Sep 2, 2024

Andrew Ng

Adjunct faculty, Stanford

“If the bill is passed in its present form, it will stifle AI model builders, especially open source developers.”

X – Jun 5, 2024

Andreessen Horowitz

Company, Venture Capital

“open source AI research in the U.S. will be completely crushed due to the legal risks involved”

a16z – Jun 18, 2024

Yann LeCun

Chief Scientist, Meta

“- Making technology developers liable for bad uses of products built from their technology will simply stop technology development. - It will certainly stop the distribution of open source AI platforms, which will kill the entire AI ecosystem, not just startups, but also academic research.”

X – Jun 5, 2024

Will whistleblowers be protected once SB 1047 is passed?

Zvi Mowshowitz

Commentator, Don't Worry About the Vase

“Section 2207 is whistleblower protections. Odd that this is necessary, one would think there would be such protections universally by now? There are no unexpectedly strong provisions here, only the normal stuff.”

Don't Worry About the Vase – Feb 12, 2024

Sheppard Mullin Richter & Hampton

Company, Law firm

“These protections reflect a growing demand from workers for more robust whistleblower laws in an ever-growing AI industry which evolves much more rapidly than existing regulations have the ability to.”

Mondaq – Sep 11, 2024

Geoffrey Hinton

Winner, Turing Award

“Ordinary whistleblower protections are insufficient because they focus on illegal activity, whereas many of the risks we are concerned about are not yet regulated.”

Open Letter – Jun 3, 2024

Safe and Secure AI innovation

Company, Lobby Group

“[Existing legislative efforts] don’t protect whistleblowers... It is perfectly clear that they are not a substitute for SB 1047”

Safe & Secure AI Blog – Aug 22, 2024

Morgan Lewis

Company, Law firm

“Developers of covered models cannot prevent an employee from or retaliate against an employee for disclosing the developer’s noncompliance with the bill to the AG or Labor Commissioner or that the AI model is unreasonably dangerous.”

Morgan Lewis – Aug 28, 2024

Simon Last

Co-founder, Notion

“It includes whistleblower protections for employees who report safety concerns at AI companies, and importantly, the bill is designed to support California’s incredible startup ecosystem.”

LA Times – Aug 7, 2024

Scott Wiener

State Senator, California

“SB 1047 balances AI innovation with safety by: ... Creating whistleblower protections for employees of frontier AI laboratories”

Scott Wiener – Aug 14, 2024

Scott Aaronson

Schlumberger Centennial Chair of Computer Science, University of Texas at Austin

“It establishes whistleblower protections for people at AI companies to raise safety concerns.”

Shtetl-Optimized – Sep 3, 2024

Will SB 1047 reduce existential risk?

This includes some notion of whether existential risk exists in the first place.

Yann LeCun

Chief Scientist, Meta

“The debate on existential risk is very premature until we have a design for a system that can even rival a cat in terms of learning capabilities, which we don’t have at the moment”

Financial Times – Oct 18, 2023

Andrew Ng

Adjunct faculty, Stanford

“Unfortunately, by raising compliance costs for open source efforts, this will discourage the release of open models, and make AI less competitive and less safe.”

Time – Aug 28, 2024

Andreessen Horowitz

Company, Venture Capital

“What we really need is more investment in defensive artificial intelligence solutions...not slowing down the fundamental innovation that can actually unlock those defensive applications.”

a16z – Jun 18, 2024

Ro Khanna

House Representative, US

“Regulation aimed at CBRN risks should focus on restricting the physical tools needed to create these physical threats, rather than restricting access to knowledge that is already available through non-AI means.”

Open Letter – Aug 14, 2024

Fei-Fei Li

Professor, Stanford

“This bill does not address the potential harms of AI advancement.”

Fortune – Aug 5, 2024

Zvi Mowshowitz

Commentator, Don't Worry About the Vase

“My worry is that this has potential loopholes in various places, and does not yet strongly address the nature of the future more existential threats. If you want to ignore this law, you probably can.”

Zvi Mowshowitz Substack – Feb 12, 2024

Scott Wiener

State Senator, California

“But with every powerful technology comes risk. [This bill] is not about eliminating risk. Life is about risk. But how do we make sure that at least our eyes are wide open? That we understand that risk and that if there’s a way to reduce risk, we take it.”

Vox – Jul 18, 2024

Gabriel Weil

Professor, Touro Law Center

“This legislation represents an important first step toward protecting humanity from the risks of advanced artificial intelligence (AI) systems.”

Lawfare – May 7, 2024

Simon Last

Co-founder, Notion

“SB 1047 supplies what’s missing: a way to prevent harm before it occurs.”

LA Times – Aug 7, 2024

Yoshua Bengio

Winner, Turing Award

“I believe this bill represents a crucial, light touch and measured first step in ensuring the safe development of frontier AI systems to protect the public.”

Fortune – Aug 14, 2024

Holly Elmore

Executive Director, Pause AI US

“We need you to help us fight for SB 1047, a landmark bill to help set a benchmark for AI safety, decrease existential risk, and promote safety research”

LessWrong Blog – Aug 11, 2024